Echocardiography AI

Our AI collaborative's first paper is out in @circimaging! We trained an AI to perform measurements of parasternal long axis #echofirst images, using expert opinions from cardiologists and physiologists from 17 hospitals across the UK.

But hasn't this been done before? Well, yes... but not quite like this. Not only did we train our AI using many experts, we also validated it on videos that were each graded by 13 experts. We therefore know how well the AI performs in the context of normal, healthy, human disagreement.

So how good is the AI? For each case we calculate a "consensus" measurement across the 13 experts as a gold standard. Then we check how close the measurements by (1) the AI and (2) the individual humans were to this consensus. And the AI came out closer to the consensus than the individual humans did, despite the humans setting the standard.
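To make the comparison concrete, here is a minimal sketch of that kind of check. It is not our actual analysis code: the function name `consensus_errors`, the use of the mean as the "consensus", the absolute-difference error, and the toy numbers are all assumptions made for illustration.

```python
import numpy as np

def consensus_errors(expert, ai):
    """Compare AI and individual experts against a per-case consensus.

    expert: array of shape (n_cases, n_experts), one measurement per expert.
    ai:     array of shape (n_cases,), one AI measurement per case.
    """
    expert = np.asarray(expert, dtype=float)
    ai = np.asarray(ai, dtype=float)

    # Assumption: the consensus is the mean of all experts for that case.
    consensus = expert.mean(axis=1)

    # Absolute distance of the AI from the consensus, per case.
    ai_error = np.abs(ai - consensus)

    # Absolute distance of each individual expert from the same consensus.
    # Note: each expert's own reading is part of the consensus here,
    # which is a simplification that favours the humans.
    expert_error = np.abs(expert - consensus[:, None])

    return consensus, ai_error, expert_error

# Toy data (made up): 2 cases, 3 experts, measurements in mm.
expert = np.array([[10.0, 12.0, 14.0],
                   [20.0, 20.0, 23.0]])
ai = np.array([12.5, 21.0])

consensus, ai_error, expert_error = consensus_errors(expert, ai)
print("mean AI error:    ", ai_error.mean())
print("mean expert error:", expert_error.mean())
```

In this toy example the AI's average distance from the consensus is smaller than the average individual expert's, which is the pattern described above.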

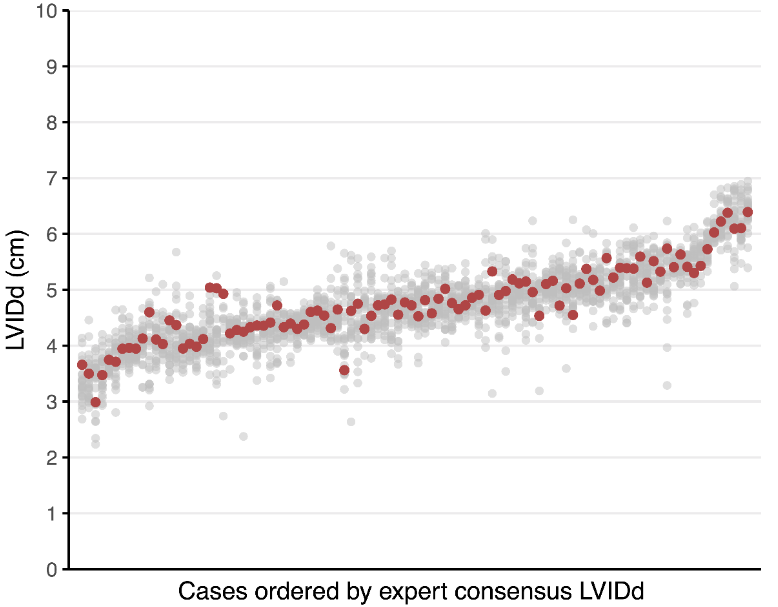

The AI seems to do an excellent job of mimicking an "average" expert. If we plot each of the 100 example videos on the x-axis and the measurements on the y-axis, the AI's measurement (red dot) tends to fall nicely inside the spread of the experts (the grey dots) for each scan.
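One rough way to put a number on "falls inside the spread" is to count how often the AI's value lies between the lowest and highest expert value for a scan. The sketch below assumes that min-to-max definition of spread; the helper name `fraction_within_expert_range` and the toy data are hypothetical, and this is not a figure reported in the paper.

```python
import numpy as np

def fraction_within_expert_range(expert, ai):
    """Fraction of cases where the AI lies within the experts' min-max range.

    expert: array of shape (n_cases, n_experts).
    ai:     array of shape (n_cases,).
    """
    expert = np.asarray(expert, dtype=float)
    ai = np.asarray(ai, dtype=float)

    # Assumption: "spread" means the range from lowest to highest expert.
    lower = expert.min(axis=1)
    upper = expert.max(axis=1)

    # True for each case where the AI sits inside that range (inclusive).
    inside = (ai >= lower) & (ai <= upper)
    return float(inside.mean())

# Toy data (made up): 3 scans, 3 experts each.
expert = np.array([[10.0, 12.0, 14.0],
                   [20.0, 20.0, 23.0],
                   [30.0, 31.0, 35.0]])
ai = np.array([12.5, 24.0, 30.0])

fraction = fraction_within_expert_range(expert, ai)
print(f"AI inside expert range for {fraction:.0%} of scans")
```

A min-max range widens as more experts are added, so a percentile band would be a stricter alternative.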